Deepfakes: How AI-Generated Fake Faces Are Made and the Risks They Pose

Is the digital world we inhabit becoming a hall of mirrors, where reality and illusion blur beyond recognition? The rise of deepfakes, powered by sophisticated artificial intelligence, presents a profound challenge to truth and trust, reshaping how we perceive information and interact with the digital landscape.

The term "deepfake" is a blend of "deep learning" and "fake." This deceptively simple word describes a complex process in which artificial intelligence creates synthetic media (images, videos, and audio) that convincingly depict people doing or saying things they never actually did. One widely used approach, the generative adversarial network (GAN), pits two neural networks against each other: a generator (the synthesis engine) produces the fake content, and a discriminator tries to tell it apart from real examples. The discriminator's feedback pushes the generator to produce ever more convincing forgeries.
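The adversarial loop can be illustrated with a deliberately tiny example. The sketch below is a toy one-dimensional GAN in plain Python, not how production deepfake tools are built: the "real data" is just numbers drawn from a normal distribution, the generator is the hypothetical linear map `a * z + b`, and the discriminator is a single logistic unit `sigmoid(w * x + c)`. All names and hyperparameters are illustrative assumptions. Each iteration first updates the discriminator to better separate real from fake samples, then updates the generator to fool the updated discriminator.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=3000, batch=64, lr=0.05, real_mean=0.0, real_std=1.0, seed=0):
    """Alternating GAN training in one dimension (toy illustration, hypothetical names).

    Generator ("synthesis engine"): x = a * z + b with z ~ N(0, 1).
    Discriminator: D(x) = sigmoid(w * x + c), its estimate that x is real.
    Returns the learned generator parameters (a, b).
    """
    rng = random.Random(seed)
    a, b = 1.0, 5.0   # generator starts far away from the real data
    w, c = 0.0, 0.0   # discriminator starts undecided (D = 0.5 everywhere)

    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        zs = [rng.gauss(0.0, 1.0) for _ in range(batch)]

        # 1) Discriminator step: minimise -log D(real) - log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            g = sigmoid(w * x + c) - 1.0        # gradient of -log D(x) w.r.t. the logit
            gw += g * x
            gc += g
        for z in zs:
            x = a * z + b
            g = sigmoid(w * x + c)              # gradient of -log(1 - D(x)) w.r.t. the logit
            gw += g * x
            gc += g
        w -= lr * gw / batch
        c -= lr * gc / batch

        # 2) Generator step: minimise -log D(fake) against the updated discriminator.
        ga = gb = 0.0
        for z in zs:
            x = a * z + b
            g = (sigmoid(w * x + c) - 1.0) * w  # gradient of -log D(x) w.r.t. x
            ga += g * z
            gb += g
        a -= lr * ga / batch
        b -= lr * gb / batch

    return a, b


a, b = train_toy_gan()
print(f"generator mean after training: {b:.2f}")
```

Starting from `b = 5`, the generator's mean should drift toward the real data's mean of 0 as the two players trade updates. Because a single logistic unit can only compare averages, only the mean is reliably matched here; the generator's spread `a` is not learned faithfully with a discriminator this simple.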

Early deepfake tools required large amounts of training data, so celebrities, whose images and video footage are widely available, became the primary targets. It was, and remains, relatively easy to train AI models on publicly accessible footage of prominent figures and generate synthetic video of them. The potential for misuse, however, extends well beyond amusement.

Early deepfakes were often made for amusement, but the technology quickly moved beyond simple jokes into darker territory. The ease of creating these forgeries led to unethical uses, including fake pornographic videos. Internet anonymity and the speed of social media sharing amplified the problem, allowing deepfakes to spread widely before they could be verified or debunked.

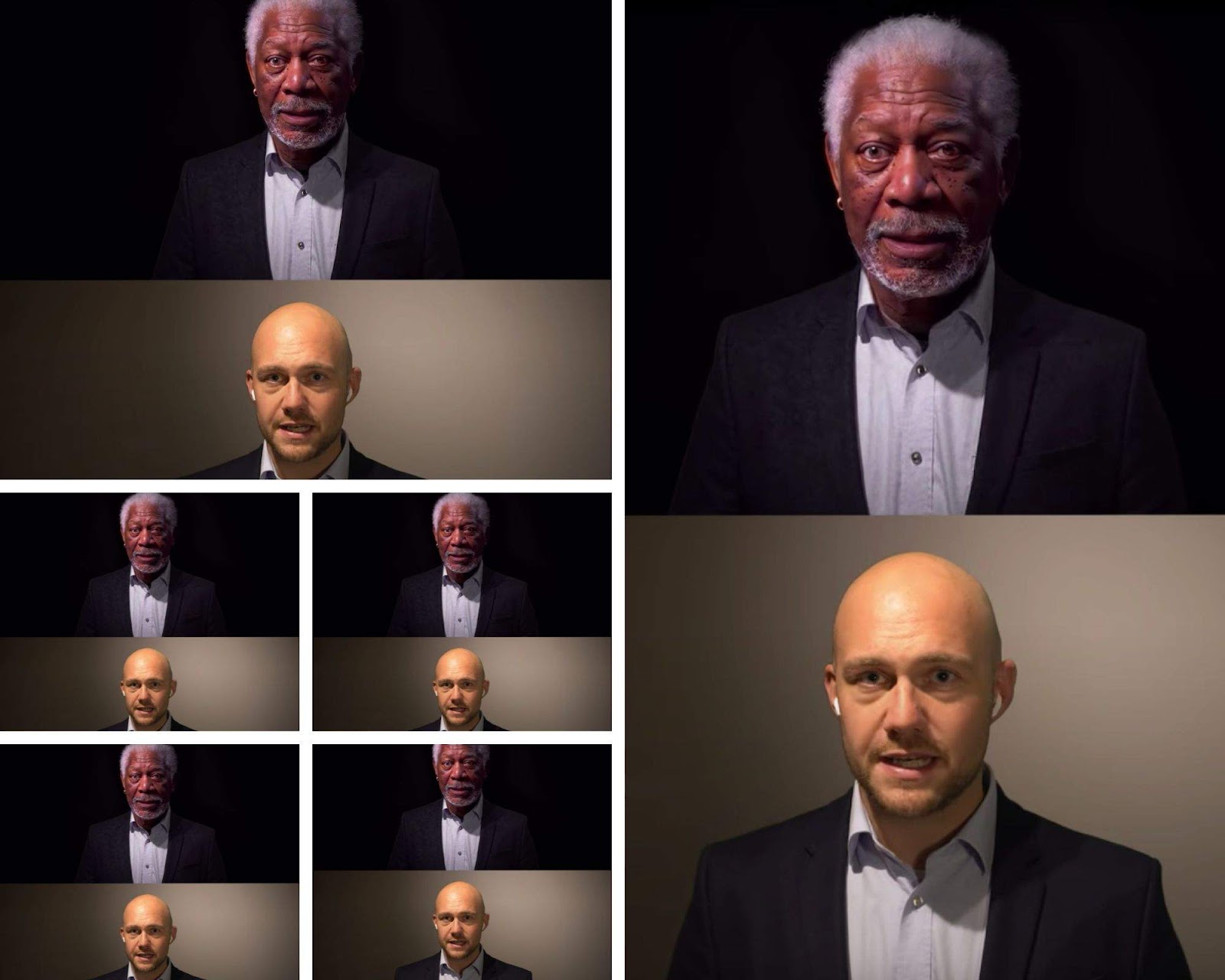

One of the most striking demonstrations of this technology is a deepfake video of the actor Morgan Freeman. Created by a Dutch deepfake YouTube channel, it superimposes a remarkably convincing likeness of Freeman's face onto another person's body, showing how sophisticated such manipulation has become and how blurred the line between real and fake now is.

Deepfakes have also complicated the already fraught landscape of online disinformation. They are used as "proof" for fake news stories, to discredit celebrities and public figures, and to sow discord and division. The potential for damage is immense, particularly for people whose livelihoods depend on their public image and the integrity of their content. The technology itself is not inherently malicious, but in the hands of people with ulterior motives it can be weaponized with devastating consequences.

The rapid proliferation of deepfakes has prompted responses ranging from legislation to new detection tools. In the United States, the bipartisan Nurture Originals, Foster Art, and Keep Entertainment Safe (NO FAKES) Act aims to protect people's voices and visual likenesses from unauthorized computer-generated replicas. Measures like this seek to limit the harm caused by deepfakes and to give victims of this form of digital deception legal recourse.

The table below summarizes the main aspects of the topic:

| Aspect | Description |
|---|---|
| Definition | Deepfakes are synthetic media (images, videos, audio) created using artificial intelligence, primarily deep learning techniques, to depict individuals doing or saying things they never actually did. |
| Technology | A common approach, the generative adversarial network (GAN), pairs two neural networks: a generator (synthesis engine) that creates the fake content and a discriminator that tries to distinguish it from real data, driving iterative refinement. |
| Training Data | Early deepfakes required significant training data, making celebrities and public figures the most common targets because their images and videos are widely available. |
| Applications | Deepfakes can be used for entertainment, satire, disinformation, and malicious purposes, including creating fake pornographic videos and discrediting individuals. |
| Impact | Deepfakes threaten trust, truth, and the integrity of information, with consequences for individuals, organizations, and society. |
| Detection | Tools and methods are emerging to detect deepfakes, including analyzing inconsistencies in images, videos, and audio, and scrutinizing the source and context of the content. |
| Legislative Responses | Various measures are being considered and implemented to regulate deepfakes, including laws that protect individuals' likenesses and voices and penalize malicious creators and distributors. |
| Ethical Concerns | Deepfakes raise ethical questions about privacy, consent, and the manipulation of reality, calling for careful weighing of potential harms and clear ethical guidelines. |

Despite these efforts, deepfakes continue to spread, and they have found a particularly troubling niche in adult entertainment. Websites and online platforms dedicated to creating and distributing celebrity deepfake pornography have emerged, often targeting actresses, YouTubers, Twitch streamers, and other public figures. These platforms exploit their likenesses to produce explicit content they never consented to.

The existence of such platforms raises complex ethical questions. Creating and distributing these deepfakes can cause profound harm to the people depicted, including reputational damage and emotional distress, and can expose both creators and distributors to legal consequences.

Moreover, the line between genuine and fabricated content becomes increasingly blurred in this context. Viewers may mistake deepfakes for real footage, which adds to the confusion and can help spread misinformation and harmful stereotypes.

Identifying deepfakes is becoming harder as the technology produces ever more realistic content. The difficulty lies in distinguishing a genuine piece of media from a meticulously crafted forgery. The case of Katy Perry's mother, who was fooled by a deepfake image of her daughter, shows that even people who know the subject personally can be deceived.

In an effort to combat the spread of misinformation and harmful content, individuals and organizations are developing techniques to recognize and investigate deepfakes. These include:

- Analyzing inconsistencies: This involves scrutinizing the image or video for any anomalies, such as blurring, unnatural lighting, or distortions that are not consistent with real-world physics.

- Scrutinizing the source and context: This requires verifying the source of the content, examining the context in which it was shared, and evaluating the credibility of the platform or website that distributed the content.

- Looking for signs of manipulation: This involves checking for indicators of tampering, such as watermarks, missing metadata (although many platforms strip metadata on upload, so its absence alone proves little), or other traces of editing.

- Utilizing detection tools: Artificial intelligence tools designed specifically to identify deepfakes are under development.
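Two of the checks above can be sketched with nothing but the Python standard library. This is a minimal illustration under stated assumptions, not a working deepfake detector, and every function name is hypothetical. `laplacian_variance` computes the variance of a Laplacian filter, a common sharpness measure, so that a region (for example a face box, assumed to come from some separate face detector) can be flagged when it is much blurrier than the whole frame; the frame is assumed to be a grayscale image given as a list of rows, and the 0.25 ratio is an arbitrary illustrative threshold. `has_exif` walks the segment headers of a JPEG file to see whether an EXIF metadata block is present.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over interior pixels (low = little fine detail)."""
    lap = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1] - 4 * gray[y][x]
        for y in range(1, len(gray) - 1)
        for x in range(1, len(gray[0]) - 1)
    ]
    mean = sum(lap) / len(lap)
    return sum((v - mean) ** 2 for v in lap) / len(lap)


def region_blurrier_than_frame(gray, top, left, height, width, ratio=0.25):
    """Hypothetical heuristic: flag a region whose sharpness is far below the frame's."""
    region = [row[left:left + width] for row in gray[top:top + height]]
    return laplacian_variance(region) < ratio * laplacian_variance(gray)


def has_exif(jpeg: bytes) -> bool:
    """Walk JPEG marker segments and report whether an APP1 'Exif' block is present."""
    if jpeg[:2] != b"\xff\xd8":                          # every JPEG starts with SOI
        raise ValueError("not a JPEG stream")
    i = 2
    while i + 4 <= len(jpeg) and jpeg[i] == 0xFF:
        marker = jpeg[i + 1]
        if marker in (0xDA, 0xD9):                       # SOS / EOI: header segments are over
            return False
        length = int.from_bytes(jpeg[i + 2:i + 4], "big")  # includes its own 2 bytes
        if marker == 0xE1 and jpeg[i + 4:i + 10] == b"Exif\x00\x00":
            return True
        i += 2 + length                                  # skip marker and segment body
    return False
```

Neither result is proof on its own: genuine photos can contain deliberately blurred areas, and a missing EXIF block is common simply because metadata is often removed when files are shared. Signals like these are best treated as prompts for the source-and-context checks listed above.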

The evolution of deepfake technology is a complex issue with far-reaching implications. Its rise demands critical thinking, vigilance, and effective countermeasures to protect individuals and safeguard the integrity of information. As the technology continues to evolve, meeting the challenges of the deepfake age will require collaboration among technology developers, policymakers, and media outlets.

As the technology improves, deepfakes will only become more sophisticated, demanding more advanced detection methods. The human element matters just as much: developing digital literacy and critical thinking skills among the general public will be essential to avoid manipulation.